Mark Twain famously said, “History doesn’t repeat itself, but it often rhymes.” In tech security, AI is creating a new verse that rhymes with Cloud.

Just over a decade ago, CISOs tried to ban Dropbox and Google Drive to stop unsanctioned file sharing. That didn’t work. Cloud apps simply went underground, until security leaders realized that blocking wasn’t the answer. Governance was.

Today, AI coding tools like GitHub Copilot and Amazon Q are the new Shadow IT. Developers are using them, sometimes with approval, but mostly without. And almost always with insufficient oversight or policy guardrails.

They’re moving fast. Ignoring it won’t stop the adoption, and trusting dedicated AI coding tools and existing security protocols to be ‘secure enough’ is a leap of faith CISOs can’t afford.

This article skips the AI hype and gets practical, providing CISOs and security leaders with a brass-tacks guide to securing AI-generated code at the pace it’s being written: with real-time IDE scanning, instant feedback in GitHub repos, enforceable governance, and tools like Checkmarx One.

But first, a quick review of the AI-generated code landscape.

The Reality of AI Coding Adoption – the Train Has Long Left the Station

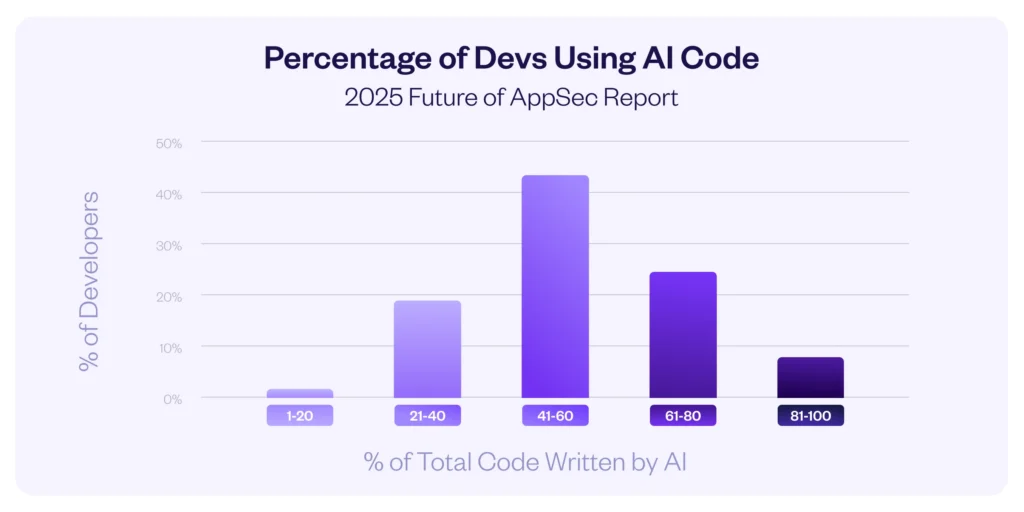

Checkmarx’s upcoming 2025 global survey, conducted with Censuswide, found that AI coding tools have already become a core part of modern development workflows.

Across CISOs, AppSec managers, and developers, nearly 70% of respondents estimated that more than 40% of their organization’s code was AI-generated in 2024, with 44.4% of respondents estimating that 41–60% of their code is AI-generated.

This stat is corroborated by the Stack Overflow 2024 Developer Survey, which shows that 76% of developers are using or planning to use AI tools in their work.

AI-Generated Code – The New Risky Norm

AI coding assistants like GitHub Copilot, Gemini Code Assist, Cursor, and Amazon Q Developer don’t replace built-in security. They aren’t a substitute for application security testing (AST). While they can make development faster, even the vendors recommend using “automated tests and tooling.”

Relying on AI coding assistants to be secure-by-default falls short. AI coding assistants can introduce new risks such as hallucinated code or prompt injection, and manual reviews alone struggle to scale. Their transparency is also limited, as they provide only vague details on their model training or AI vulnerabilities.

A 2024 empirical study, Security Weaknesses of Copilot-Generated Code in GitHub Projects, analyzed 733 Copilot-generated snippets from GitHub projects. It found that 29.5% of Python and 24.2% of JavaScript snippets contained security weaknesses, including XSS and improper input validation.

AI-generated code isn’t inherently more secure than human-generated code. Just as human-generated code poses security risks, so does AI-generated code. What’s different is the scale and speed of AI-generated code, as well as the psychological factors that lead to a lack of oversight.

Why AI-Generated Code Gets Less Review and Creates Security Risks

Because developers might not fully understand or carefully review code created by AI, this code may end up with more security problems and errors than code written and checked by people. The way AI creates code can be opaque, and it might learn from flawed examples. If developers trust AI too much and don’t double-check its work, issues can easily be missed.

Research shows AI-generated code often receives less careful checking than human-written code, creating serious security risks. Developers feel less responsible for AI-generated code and spend less time reviewing it properly.

Research also shows that coders using AI tools wrote more insecure code than those who didn’t. This false confidence is made worse because many developers have an unfounded sense of trust in AI-generated code and are less familiar with the logic behind it. Without proper review processes and specialized tools for checking AI-generated code, these problems will continue as developers trust AI too much without verifying its work.

That’s why securing AI-generated code requires a new kind of strategy: one tailored to the unique challenges it poses.

A CISO’s Strategy for Securing AI-Generated Code

To address the growing complexity and scale of AI-written code, CISOs must implement a layered strategy that combines real-time technical controls with organizational governance.

Governance Controls

Governance controls help CISOs enforce responsible AI adoption at scale by defining boundaries, policies, and shared responsibilities that span development, security, and compliance teams. Some of these governance controls are good practices even when dealing with human-generated code. But they become even more important when AI is added to the mix.

Here’s what CISOs should be doing:

AI Code Usage Policies

Establish granular policies to govern AI tool usage:

- Specifying which AI tools are permitted, and in what capacity.

- Defining acceptable use cases (e.g., prototyping vs. production code).

- Ensuring that AI-generated code is clearly identifiable.

- Restricting use of AI-generated code in sensitive or critical components, such as authentication modules or financial systems.

- Mandating peer reviews to ensure quality and security.
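Policies like these are easier to enforce when they are expressed as code that a pipeline can evaluate. The following is a minimal sketch of that idea, not a reference implementation: the tool identifiers, path patterns, and the `AICodePolicy`, `Change`, and `violations` names are all hypothetical, and it assumes each change already carries a declaration of which AI tool (if any) produced it.

```python
from dataclasses import dataclass, field
from fnmatch import fnmatchcase
from typing import Optional


@dataclass
class AICodePolicy:
    # Illustrative values only; every organization would define its own.
    approved_tools: dict = field(default_factory=lambda: {
        "github-copilot": {"prototype", "production"},
        "amazon-q": {"prototype"},
    })
    restricted_paths: tuple = ("src/auth/*", "src/payments/*")
    min_peer_reviews: int = 1


@dataclass
class Change:
    path: str
    ai_tool: Optional[str]  # None means the change is declared human-written
    use_case: str           # "prototype" or "production"
    peer_reviews: int


def violations(change: Change, policy: AICodePolicy) -> list:
    """Return the policy rules a change breaks (an empty list means compliant)."""
    if change.ai_tool is None:
        return []  # this sketch only governs code tagged as AI-generated

    found = []
    allowed_uses = policy.approved_tools.get(change.ai_tool)
    if allowed_uses is None:
        found.append(f"tool '{change.ai_tool}' is not approved")
    elif change.use_case not in allowed_uses:
        found.append(f"tool '{change.ai_tool}' is not approved for {change.use_case} code")

    if any(fnmatchcase(change.path, pattern) for pattern in policy.restricted_paths):
        found.append("AI-generated code is restricted in this component")

    if change.peer_reviews < policy.min_peer_reviews:
        found.append("peer review is required before merge")
    return found
```

A check like this could run on every pull request, for example `violations(Change("src/auth/login.py", "amazon-q", "production", 0), AICodePolicy())`, and block the merge whenever the returned list is not empty.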

Security Review Processes

Formalize the review process. This means:

- Establishing thresholds for when reviews are required (e.g., all AI-generated code touching sensitive systems or business logic)

- Assigning responsibility to trained AppSec reviewers or peer developers, and integrating these reviews into PR and CI/CD workflows

- Defining reviews at multiple points in the SDLC: pre-review, following commit, within CI/CD using a tool like Vorpal, etc.

- Aligning reviews to ensure code meets secure coding standards like the OWASP Top 10

- Training reviewers on how to review AI-generated code and what to look for, going beyond functionality checks to examine how the inspected code handles inputs, sanitizes data, and manages privilege boundaries

Developer Education

Invest in training that goes beyond general secure coding principles and focuses on the unique risks posed by GenAI tools.

Developers should understand how AI models generate code, the security weaknesses they’re prone to introducing, and how to critically evaluate AI-generated snippets before integrating them.

This includes recognizing the limits of AI suggestions and validating logic paths. To reinforce this mindset, organizations can incorporate ongoing, role-specific education into developer workflows through platforms like Checkmarx Codebashing.

Cross-Functional Accountability

Build formal accountability frameworks that unite AppSec, DevOps, and compliance.

This includes setting shared KPIs (like reducing AI-originated vulnerabilities or improving time-to-remediation), maintaining audit trails for how AI-generated code is reviewed and approved, and running regular cross-team assessments to track policy adherence.

Culturally, it means shifting from siloed enforcement to shared ownership, where developers, too, are aware of compliance expectations, and security teams offer collaborative, context-aware guidance.
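Shared KPIs such as AI-originated vulnerability counts and time-to-remediation only work if every team computes them the same way. As a rough sketch, assuming findings are exported with an "AI-originated" flag plus open and fix timestamps (the `Finding` record and `kpis` function are hypothetical names, not part of any tool mentioned here), the calculation could look like this:

```python
from dataclasses import dataclass
from datetime import datetime
from statistics import mean
from typing import Optional


@dataclass
class Finding:
    ai_originated: bool               # assumed to be set during triage or review
    opened: datetime
    fixed: Optional[datetime] = None  # None while the finding is still open


def kpis(findings):
    """Summarize AI-originated findings and their mean time-to-remediation in days."""
    ai_findings = [f for f in findings if f.ai_originated]
    remediated = [f for f in ai_findings if f.fixed is not None]
    days = [(f.fixed - f.opened).total_seconds() / 86400 for f in remediated]
    return {
        "ai_originated_total": len(ai_findings),
        "ai_originated_open": len(ai_findings) - len(remediated),
        "mean_days_to_remediate": mean(days) if days else None,
    }
```

Tracking these numbers per quarter gives AppSec, DevOps, and compliance a common baseline to review together, rather than each team reporting from its own data.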

Technical Controls

Technical and governance controls complement one another. With technical controls, the focus is on automated, scalable solutions that integrate into development pipelines.

Implementation should leverage existing security tools, prioritize critical systems, and ensure measurable risk reduction without diving into granular configurations. Below are the main technical controls:

Automated Security Testing

AST tools, including SAST, DAST, API Security, and SCA, are foundational for detecting known vulnerabilities in source code and applications, insecure dependencies, and misconfigurations across the SDLC. While they’re not enough on their own to secure AI-generated code, they remain essential as a baseline layer of protection in any application security strategy.

Real-Time IDE Scanning (AI Secure Coding Assistants)

AI Secure Coding Assistants guide developers when working with AI-generated code by identifying insecure patterns and recommending secure alternatives in real time.

They offer contextual suggestions as code is written, helping developers spot flaws early, before code reaches staging or production environments.

Real-time scanning inside the IDE helps developers flag potential risks and coding patterns that don’t follow best practices. This is useful for human-generated code, but it’s critical for AI-generated code.

These tools provide instant feedback on short snippets of code before they’re even committed, surfacing risks like unsafe input handling or insecure defaults.

For developers using GitHub Copilot, ASCA (Checkmarx’s AI Secure Coding Assistant) can even generate remediation suggestions, turning AI from just a coding assistant into a security partner.

Unlike SAST, which analyzes entire applications post-commit, IDE scanning focuses on localized code blocks. It doesn’t replace deep analysis; rather, it tightens feedback loops so developers learn secure coding practices in real time.
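To make “unsafe input handling” concrete, here is a small, self-contained sketch of the kind of localized pattern such feedback is aimed at. It is an illustration only, not the output or rule set of any particular scanner; the table, function names, and payload are assumptions chosen for the example.

```python
import sqlite3


def find_user_unsafe(conn, name):
    # Insecure: user input is spliced into the SQL text, so a crafted
    # value can change the meaning of the query (SQL injection).
    query = f"SELECT id, name FROM users WHERE name = '{name}'"
    return conn.execute(query).fetchall()


def find_user_safe(conn, name):
    # Safer: a parameterized query keeps the input strictly as data.
    query = "SELECT id, name FROM users WHERE name = ?"
    return conn.execute(query, (name,)).fetchall()


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, name TEXT)")
conn.executemany("INSERT INTO users (name) VALUES (?)", [("alice",), ("bob",)])

payload = "x' OR '1'='1"
leaked = find_user_unsafe(conn, payload)  # matches every row in the table
nothing = find_user_safe(conn, payload)   # matches no rows
```

Both functions look equally plausible at a glance, which is why a check at the moment the snippet is written or accepted is more reliable than hoping a reviewer notices the difference later.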

See ASCA in action:

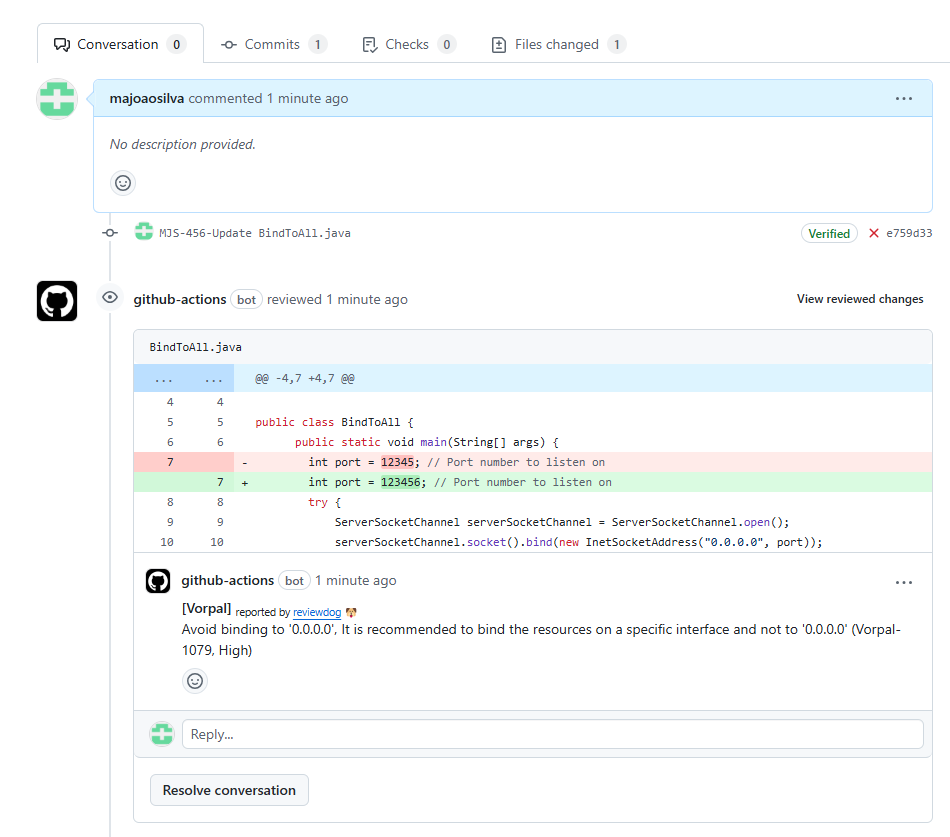

Pre-merge Developer Feedback

Vorpal, a lightweight GitHub Action, provides a critical security checkpoint at the pull request stage. Acting as the last line of defense before code enters your main branch, Vorpal flags violations of secure coding best practices, with results visible directly in GitHub’s interface.

Available as a free GitHub Action for developers worldwide, Vorpal is particularly effective with AI-generated code, which may appear syntactically correct but carry hidden risks due to insecure patterns.

Unlike traditional security gates that slow development, Vorpal integrates seamlessly into existing workflows, allowing teams to maintain velocity while improving security.

Additional AI Tools

AI tools will often suggest insecure open-source packages. SCA identifies a secure package to use instead. If no other secure package exists, Checkmarx can suggest a package with similar functionality.

Security integration into AI tools is helpful. For example, Checkmarx One integrates into ChatGPT and GitHub Copilot to automatically scan source code and identify malicious packages within the AI interface itself.
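The idea of vetting AI-suggested packages before they are installed can be sketched in a few lines. This is a simplified illustration under stated assumptions, not how any SCA product works: the `APPROVED` and `KNOWN_BAD` sets and the package names in them are hypothetical stand-ins for data that would normally come from an SCA tool or an internal registry.

```python
import re

# Hypothetical data for illustration; real lists would come from an SCA
# tool or an internal package registry, not a hard-coded set.
APPROVED = {"requests", "cryptography"}
KNOWN_BAD = {"reqeusts"}  # made-up typosquat-style name

_NAME = re.compile(r"^\s*([A-Za-z0-9][A-Za-z0-9._-]*)")


def normalize(name):
    # Compare names case-insensitively, treating '-', '_' and '.' alike.
    return re.sub(r"[-_.]+", "-", name).lower()


def triage(requirement_lines):
    """Sort suggested dependencies into approved, blocked, and needs_review."""
    result = {"approved": [], "blocked": [], "needs_review": []}
    for line in requirement_lines:
        line = line.split("#", 1)[0]  # drop trailing comments
        match = _NAME.match(line)
        if not match:
            continue  # blank line or comment-only line
        name = normalize(match.group(1))
        if name in KNOWN_BAD:
            result["blocked"].append(name)
        elif name in APPROVED:
            result["approved"].append(name)
        else:
            result["needs_review"].append(name)
    return result
```

Anything that lands in `needs_review` is a candidate for a human or tool-assisted check, which also catches package names an assistant has simply invented.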

Isolated Execution Environments

Use sandboxing or containerization to test AI-generated code in controlled environments and limit the blast radius of potential flaws or malicious logic.
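As a very rough sketch of the principle, the snippet below runs untrusted Python in a separate interpreter with a time limit, an empty environment, and a throwaway working directory. The `run_isolated` helper is a hypothetical name, and this is process-level separation only, not a security boundary: real isolation needs a container or VM with restricted network, filesystem, and resource access.

```python
import subprocess
import sys
import tempfile


def run_isolated(code, timeout=5.0):
    """Run a Python snippet in a child interpreter and report what happened."""
    with tempfile.TemporaryDirectory() as workdir:
        try:
            proc = subprocess.run(
                [sys.executable, "-I", "-c", code],  # -I: isolated mode, ignores user site and PYTHON* vars
                cwd=workdir,          # scratch directory, deleted afterwards
                env={},               # do not leak secrets from the parent environment
                                      # (on Windows some variables may need to be passed through)
                capture_output=True,
                text=True,
                timeout=timeout,      # the child is killed if it runs too long
            )
        except subprocess.TimeoutExpired:
            return {"status": "timeout", "stdout": "", "stderr": ""}
    status = "ok" if proc.returncode == 0 else "error"
    return {"status": status, "stdout": proc.stdout, "stderr": proc.stderr}
```

The same shape (bounded time, minimal environment, disposable workspace) carries over to container-based setups, where the runtime enforces the limits instead of the calling process.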

API Security

APIs are particularly sensitive to risks introduced by AI-generated code because they’re high-exposure entry points into critical systems. AI tools might accidentally generate code that references non-existent APIs or misuses authentication flows or API implementations.

API security tools mitigate these risks by offering automated discovery, traffic inspection, anomaly detection, and AI-driven exploit prevention.

They help enforce strong authentication (e.g., OAuth, JWT), validate inputs, and block business logic abuse, making them a vital control point for detecting and preventing AI-induced API vulnerabilities.
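One of the risks above, generated code calling an API that doesn’t exist, can be caught with a simple inventory check. The sketch below assumes a known-route inventory is available (for instance, derived from an API specification); the routes, the `KNOWN_ROUTES` set, and the `is_known` helper are hypothetical and stand in for what an API discovery tool would provide.

```python
import re

# Hypothetical inventory of documented routes, used only for illustration.
KNOWN_ROUTES = {
    ("GET", "/v1/users/{id}"),
    ("POST", "/v1/orders"),
}


def _template_to_regex(template):
    # Turn "/v1/users/{id}" into a pattern where {id} matches one path segment.
    parts = re.split(r"(\{[^/{}]+\})", template)
    pattern = "".join("[^/]+" if p.startswith("{") else re.escape(p) for p in parts)
    return re.compile(pattern + "/?")


_COMPILED = [(method, _template_to_regex(path)) for method, path in KNOWN_ROUTES]


def is_known(method, path):
    """Return True if the method and path match a documented route."""
    method = method.upper()
    return any(m == method and rx.fullmatch(path) for m, rx in _COMPILED)
```

Calls extracted from AI-generated code that fail this check, such as `is_known("GET", "/v1/users/42/export")`, are worth a closer look before the code ships.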

How Checkmarx One Secures AI-Generated Code

Checkmarx One protects AI-generated code through a layered defense strategy that spans the entire development lifecycle.

The platform combines foundational AppSec tools (SAST, DAST, SCA, Secrets Detection, Malicious Package Protection, Container Security, IaC Security, and API security) with AI-specific controls designed for the unique challenges of AI-generated code.

What sets Checkmarx One apart is its comprehensive approach to security: ASCA catches issues as developers write code within the IDE, full SAST/DAST scans offer deeper analysis before deployment, and the platform integrates seamlessly with open-source tools.

For GitHub users, Checkmarx’s free, open source Vorpal tool provides an additional security checkpoint during pull requests, complementing the Checkmarx One platform. This multi-layered approach ensures AI-generated code receives appropriate scrutiny at each stage of development.

Most importantly, Checkmarx One brings it all together within a single platform, providing CISOs, AppSec leaders, and developers with full visibility and consistent enforcement from code creation to deployment.

Security integration directly into AI coding tools represents the newest frontier in application security. Checkmarx One now offers integrations with popular AI coding assistants, automatically scanning generated code and identifying security issues without requiring developers to switch contexts.

These integrations can also help identify potentially malicious packages that AI assistants might suggest, offering secure alternatives with similar functionality when available. This approach meets developers where they are, within their preferred AI tools, rather than requiring them to adopt yet another security solution.

Conclusion

AI-generated code is no longer an emerging challenge; it’s the new normal. As with any technological breakthrough, it introduces significant benefits and new risks, and both demand attention. With the right combination of tools and governance, CISOs can ensure their teams embrace the productivity gains of AI coding assistants without compromising security.

The organizations that will thrive in this new landscape won’t be those that resist AI-generated code, but those that secure it effectively. Checkmarx One offers a unified approach to this challenge, helping security teams keep pace with AI-accelerated development while maintaining robust security.

Request a demo to see how Checkmarx One helps secure AI-generated code while maintaining your development velocity.